Chapter 12 Penalized Regression Methods

Building on the foundational concepts introduced in previous chapters, it is important to recognize that while the Ordinary Least Squares (OLS) method-discussed in Chapter 2 and detailed in Chapter 4—offers a robust framework for statistical inference by minimizing the sum of squared residuals and providing the Best Linear Unbiased Estimator (BLUE) under the Gauss-Markov assumptions, it may not always yield the best predictive performance in complex data scenarios. In the pursuit of flexible prediction or obtaining unbiased coefficients, we often transform variables in linear models into polynomials, create interaction terms, and apply various transformations to better capture complex relationships in the data. However, in the era of big data and complex models, traditional linear regression often falls short when faced with high-dimensional datasets where the number of predictors is large relative to the number of observations. In such scenarios, OLS estimates can become unstable, and exhibit high variance, leading to models that perform exceptionally well on training data but fail to generalize effectively due to overfitting , i.e unbiased coefficients but without any external validity. Moreover, multicollinearity among predictors—where high correlations make the design matrix nearly singular—can significantly inflate variance estimates and destabilize coefficient interpretations, undermining the model’s reliability. These challenges highlight the limitations of OLS in complex data settings and underscore the need for additional methodologies that can regularize the estimation process. By trading a small amount of bias for a significant reduction in variance, such methods can improve the model’s predictive accuracy without compromising the integrity of its predictions.

In fields such as economics, social sciences, or health sciences, it is common practice to include a wide array of socioeconomic background variables and personal information in regression models—such as age, region, occupation, industry, and various other indicators. Researchers often improve these models by adding quadratic and cubic terms, as well as interactions among these indicators, to better capture nonlinear relationships and complex interactions within the data. This approach can result in models with hundreds or even thousands of features (variables) in a single OLS regression. When utilizing common surveys, especially panel data, incorporating these extensive sets of variables can lead to the very challenges previously discussed: the number of predictors (or control variables) may approach or exceed the number of observations, and multicollinearity becomes more prevalent than it might initially appear. Consequently, OLS estimates become unstable, exhibiting high variances and unreliable coefficient interpretations, which undermines the model’s reliability and predictive performance. These issues further highlight the limitations of traditional OLS regression in complex data settings and underscore the need for alternative methodologies—such as penalized regression techniques—that can effectively address these challenges without compromising the integrity of the model’s predictions.

Penalized regression methods, which often surpass OLS in predictive accuracy, have emerged as powerful tools in statistical modeling. Also known as shrinkage or regularization methods, these techniques focus primarily on predicting unseen data by emphasizing the critical balance between bias and variance. Rather than striving solely for unbiased estimators—the BLUE in classical statistical terms—our goal shifts toward developing models that manage this balance effectively. In this chapter, we focus on how penalized regression methods is used for prediction, and walk through each step in detail—from raw data to final results. We explain how to prepare and standardize data, fit the lasso model across a grid of penalty values, use cross-validation to select the optimal penalty, and interpret the selected coefficients. In Chapter 19 and 22, we will later return to these methods in the context of causal inference, where it plays a key role in the double machine learning framework.

These methods are particularly adept at handling overfitting, a key concern when modeling complex datasets, as previously discussed. Penalized regression techniques intentionally adjust the regression coefficients to create slightly biased estimators if it results in a lower overall prediction error on new, unseen data. We explored the possibility of biased estimators as better predictors in the final section of Chapter 5, highlighting how accepting some bias can reduce variance and improve predictive performance.

The general principle of penalization in regression models involves adding a penalty term to the model’s objective function—usually the sum of squared errors—to constrain or shrink the estimated regression coefficients. This penalty discourages the model from fitting too closely to the training data, helping to avoid overfitting and manage multicollinearity. By shrinking the coefficients, the model effectively reduces their variance at the cost of introducing some bias, thereby striking a balance that improves prediction accuracy on unseen data.

In practice, penalization aims to control the complexity of the model. Regularization involves adding a penalty term to the loss function used in parameter estimation, which discourages complex models by shrinking coefficient estimates and effectively preventing overfitting. The general principle is to modify the traditional loss function by introducing a penalty that constrains the magnitude of the coefficients. This penalty term includes a regularization parameter that controls the strength of the penalty, allowing us to adjust how much we want to shrink the coefficients.

While the loss function can take various forms—such as means, quantiles, expectiles, or others depending on the modeling objective—and penalization techniques can be applied across different types of models including logistic regression, splines, tree-based methods, and neural networks, in this chapter we specifically focus on linear regression models with the MSPE as the loss function.

The general form of a regularized loss function in linear regression is:

\[\begin{equation} \min_{\beta} \left\{ L(\beta) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2 + \lambda P(\beta) \right\} \end{equation}\]

where:

The choice of the penalty function distinguishes different regularization methods. L1 and L2 refer to two types of penalty functions used in regularization methods for regression models. Ridge Regression applies an L2 penalty, penalizing the sum of the squares of the coefficients. This approach shrinks the coefficients toward zero without fully eliminating any variables, leading to smaller and more stable estimates—particularly useful when dealing with multicollinearity. Lasso Regression employs an L1 penalty, penalizing the sum of the absolute values of the coefficients. This method not only shrinks coefficients but can also set some exactly to zero, effectively performing variable selection and simplifying the model. Elastic Net Regression combines both L1 and L2 penalties, blending the strengths of Ridge and Lasso using the mixing parameter, \(\alpha\). This technique is especially beneficial when dealing with highly correlated predictors, as it can handle multicollinearity while still enabling variable selection.

By selecting the appropriate penalized regression method and tuning its hyperparameters (e.g., \(\lambda\) and \(\alpha\)), we can control the trade-off between bias and variance, increase model interpretability, and improve predictive performance. Cross-validation is commonly used to determine the optimum values for these hyperparameters. In the sections that follow, we will examine each penalized regression method in detail—walking through every step from raw data to final results. Each method will be accompanied by a thorough explanation of what the code is doing and why.

You do not need to read or understand every part of the chapter. If your primary goal is implementation, you can focus on the simulation subsections under each method to learn how to apply the relevant packages directly. That’s essentially what most books and online sources provide. However, this chapter goes far beyond that: it explains the methods conceptually and practically in a way you won’t find in most other resources.

12.1 Ridge Regression

Ridge Regression is one of the earliest and most fundamental techniques in the family of penalized regression methods. It was introduced by Hoerl and Kennard in 1970 to address the problems of multicollinearity in linear regression models. When predictor variables are highly correlated, the design matrix becomes close to singular, and the Ordinary Least Squares (OLS) estimates exhibit high variance and instability. Ridge Regression addresses this challenge by incorporating a penalty term into the loss function, which effectively reduces the magnitude of the coefficient estimates toward zero without completely zeroing any of them. In Ridge regression, this penalty term helps in minimizing the weight of each predictor, thereby allocating greater significance to the most crucial predictors. This approach helps decrease the variance and consequently, the risk of overfitting. When the sample size is large, the Ridge estimators are nearly identical to the unbiased OLS estimators, which we will demonstrate below.

Consider a linear regression model with \(n\) observations and \(p\) predictors \(\mathbf{y}_{i} = \mathbf{X}_{i} \boldsymbol{\beta} + \boldsymbol{\varepsilon}_{i},\) where \(\mathbf{y} \in \mathbb{R}^n\) is the response vector, \(\mathbf{X} \in \mathbb{R}^{n \times p}\) is the design matrix of predictor variables, \(\boldsymbol{\beta} \in \mathbb{R}^p\) is the vector of coefficients to be estimated, \(\boldsymbol{\varepsilon} \in \mathbb{R}^n\) is the error term, assumed to be independently and identically distributed with mean zero and variance \(\sigma^2\).

In OLS regression, the estimates of \(\boldsymbol{\beta}\) are obtained by minimizing the residual sum of squares (RSS) as a loss function, \(\min_{\boldsymbol{\beta}} \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij}\right)^2\).The OLS solution in matrix form is \(\hat{{\beta}}_{\text{OLS}} = ({X}^\top {X})^{-1} {X}^\top{y}\). In component form, \(\hat{\boldsymbol{\beta}}_{\text{OLS}}\) can be expressed as \(\hat{\beta}_{k,\text{OLS}} = \frac{\sum_{i=1}^{n} y_i x_{ik}}{\sum_{i=1}^{n} x_{ik}^2},\) as shown at the end of Chapter 5. In OLS, the variance of \(\hat{\boldsymbol{\beta}}\) is given by \(\text{Var}(\hat{\boldsymbol{\beta}}_{\text{OLS}}) = \sigma^2 (\mathbf{X}^\top \mathbf{X})^{-1},\) assuming homoscedastic errors. However, OLS coefficient and variance estimates can become unstable when dealing with multicollinearity or high-dimensional data (where \(p \approx n\) or \(p > n\)).

When multicollinearity is present, i.e. some of the predictors are highly correlated, meaning they carry nearly the same information. In this case, the columns of \(\mathbf{X}\) are almost linearly dependent, making it difficult to distinguish the effects of different predictors. This increases the variance of \(\hat{\boldsymbol{\beta}}\), meaning small changes in the data can lead to large changes in the estimates and predictions. Similarly, in high-dimensional data, predictions become unreliable because the matrix \(\mathbf{X}^\top \mathbf{X}\) becomes close to singular (non-invertible), which occurs when there isn’t enough data to provide a unique estimate for each predictor, as \(p > n\). Ridge Regression addresses these issues by adding a penalty term, referred as \(L2\), to the OLS loss function, which stabilizes the coefficient estimates and reduces their variance.

The objective function for Ridge regression is:

\[\begin{equation} \min_{\boldsymbol{\beta}} \left\{ L(\boldsymbol{\beta}) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2 + \lambda \sum_{j=1}^{p} \beta_j^2 \right\}, \end{equation}\]

where \(\mathbf{y}_i\) represents the response variable, \(x_{ij}\) are the predictors, and \(\boldsymbol{\beta} = [\beta_0, \beta_1, \ldots, \beta_p]\) is the vector of coefficients. The regularization or tuning parameter \(\lambda \geq 0\) controls the strength of the penalty applied to the coefficients. The term \(\|\boldsymbol{\beta}\|_2^2 = \sum_{j=1}^{p} \beta_j^2\) represents the squared \(L2\) norm of the coefficient vector, which is the key component of Ridge regression’s penalty term.

The Ridge regression objective function can also be written in matrix form as:

\[\begin{equation} L(\boldsymbol{\beta}) = (\mathbf{y} - \mathbf{X} \boldsymbol{\beta})^\top (\mathbf{y} - \mathbf{X} \boldsymbol{\beta}) + \lambda \boldsymbol{\beta}^\top \boldsymbol{\beta} \end{equation}\]

where \(\mathbf{X}\) is the matrix of predictor variables, \(\mathbf{y}\) is the response vector, and \(\boldsymbol{\beta}\) is the coefficient vector. This matrix form compactly expresses the optimization problem that Ridge regression solves, while the summation form provides insight into the individual components of the solution.

The Ridge regression estimator for the coefficient \(\beta_k\) in summation notation is given by:

\[\begin{equation} \hat{\beta}_{k,\text{Ridge}} = \frac{\sum_{i=1}^{n} y_i x_{ik}}{\sum_{i=1}^{n} x_{ik}^2 + \lambda} \end{equation}\]

For all coefficients \(k = 1, \dots, p\), the solution in matrix form is \(\hat{\boldsymbol{\beta}}_{\text{Ridge}} = (\mathbf{X}^\top \mathbf{X} + \lambda \mathbf{I})^{-1} \mathbf{X}^\top \mathbf{y}.\)

The matrix formulation provides a general solution for all coefficients simultaneously, while the summation form gives more insight into how Ridge regression operates on individual predictors.

The Ridge regression coefficient for the \(k\)-th predictor can also be expressed in terms of the OLS coefficient as:

\[ \hat{\beta}_{k,\text{Ridge}} = \frac{\hat{\beta}_{k,\text{OLS}}}{1 + \frac{\lambda}{\sum_{i=1}^{n} x_{ik}^2}} \]

(Most texts provide the following relationship between OLS and Ridge coefficients. Orthogonality is beneficial but not necessary for either OLS or Ridge regression. In the context of Ridge regression, its primary advantage is that it simplifies the formula and interpretation of the results.

For orthogonal covariates, we assume each predictor \(x_i\) is independent and has an equal sum of squares across observations, \(\sum_{j=1}^n x_{ji}^2 = n\) for each predictor \(i\). Also, the cross-product of different predictors equals zero, \(\sum_{j=1}^n x_{ji} x_{jk} = 0\) for all \(i \neq k\).

We can express the Ridge estimator in terms of the OLS estimator:

\[\begin{equation} \hat{\beta}_{k,\text{Ridge}} = \frac{n}{n + \lambda} \hat{\beta}_{k,\text{OLS}} \end{equation}\]

In matrix form, For orthogonal covariates, the matrix \(X^\top X\) is a diagonal matrix where each diagonal element is equal to \(n\) (the number of observations), assuming the predictors are standardized ( which we discuss next). Thus\(X^\top X = nI\). Substituting in the formula for Ridge regression, we get \(\hat{\beta}_{\text{Ridge}} = \frac{n}{n+\lambda} \hat{\beta}_{\text{OLS}}\).

The simplification \(\frac{n}{n + \lambda}\) serves as a good approximation and conceptual aid to understand how regularization impacts coefficient estimates, particularly emphasizing uniform shrinkage in a broad sense. In practice, Ridge is particularly useful in scenarios where predictors are highly correlated or when the number of predictors is large relative to the number of observations, conditions under which OLS might perform poorly due to high variance or non-invertibility of \(X^\top X = nI\). All derivations are at the end of the chapter.)

In the OLS solution, the denominator consists only of the sum of squares of the predictor values for \(x_{ik}\). In Ridge regression, however, the regularization term \(\lambda\) is added to this denominator, which effectively shrinks the coefficient \(\hat{\beta}_{k,\text{Ridge}}\) compared to \(\hat{\beta}_{k,\text{OLS}}\). This expression shows that Ridge regression shrinks the OLS coefficient by a factor that depends on both the regularization parameter \(\lambda\) and the variability of the predictor \(x_{ik}\).When \(\lambda = 0\), the Ridge regression solution reduces to the OLS solution, meaning \(\hat{\beta}_{k,\text{Ridge}} = \hat{\beta}_{k,\text{OLS}}\). As \(\lambda\) increases, the Ridge coefficient \(\hat{\beta}_{k,\text{Ridge}}\) becomes smaller, or more shrunk, compared to \(\hat{\beta}_{k,\text{OLS}}\). Thus, these estimates called shrinkage estimators, which are biased but have lower variance than OLS estimates.

The purpose of this shrinkage is to stabilize the coefficient estimates by reducing their variance. This makes the model more robust to multicollinearity and high-dimensional data, where OLS can become unstable. By controlling the impact of large or highly correlated predictors, Ridge regression helps to reduce overfitting and improve the model’s performance on unseen data.

Similar to all mean squared prediction errors, The MSPE of the Ridge estimator can also be decomposed into the sum of the squared bias and the variance as \(\text{MSE}(\hat{\boldsymbol{\beta}}_{\text{Ridge}}) = \text{Bias}(\hat{\boldsymbol{\beta}}_{\text{Ridge}})^2 + \text{Var}(\hat{\boldsymbol{\beta}}_{\text{Ridge}}).\)

The bias and variance of the Ridge estimator can be derived both in summation form and matrix form, and these expressions help us understand how regularization affects the estimates. To compare the MSE of Ridge regression and OLS, recall that the bias and variance for OLS were discussed at the end of Chapter 5.

The variance of the Ridge estimator in summation notation for the \(k\)-th coefficient is:

\[\begin{equation} \text{Var}(\hat{\beta}_{k,\text{Ridge}}) = \frac{\sigma^2 \sum_{i=1}^{n} x_{ik}^2}{\left( \sum_{i=1}^{n} x_{ik}^2 + \lambda \right)^2} \end{equation}\]

This shows that Ridge regression reduces or at least maintains the variance compared to OLS, thanks to the regularization term \(\lambda\), which controls the shrinkage of the coefficients. The variance of the Ridge estimator is less than or equal to the variance of the OLS estimator, which can be formally written as \(\text{Var}(\hat{\beta}_{k,\text{Ridge}}) \leq \text{Var}(\hat{\beta}_{k,\text{OLS}}).\)

On the other hand, the bias introduced by Ridge regression in summation form is:

\[\begin{equation} \text{Bias}(\hat{\beta}_{k,\text{Ridge}}) = -\beta_k \frac{\lambda}{\sum_{i=1}^{n} x_{ik}^2 + \lambda} \end{equation}\]

This bias arises because Ridge regression shrinks the coefficients toward zero, deviating from the true coefficient values. As \(\lambda\) increases, the bias increases because the model penalizes the size of the coefficients more aggressively, i.e. the coefficients are shrunk more toward zero.

In matrix form, the variance of the Ridge estimator is \(\text{Var}(\hat{\boldsymbol{\beta}}_{\text{Ridge}}) = \sigma^2 (\mathbf{X}^\top \mathbf{X} + \lambda \mathbf{I})^{-1} \mathbf{X}^\top \mathbf{X} (\mathbf{X}^\top \mathbf{X} + \lambda \mathbf{I})^{-1}]\). Also, in matrix form, the bias introduced by Ridge regression is expressed as \(\text{Bias}(\hat{\boldsymbol{\beta}}_{\text{Ridge}}) = -\lambda (\mathbf{X}^\top \mathbf{X} + \lambda \mathbf{I})^{-1} \boldsymbol{\beta}.\)

Both forms show that Ridge regression trades off a small amount of bias for a significant reduction in variance. The key to minimizing the MSE lies in finding the appropriate \(\lambda\), as Ridge regression reduces variance at the cost of introducing bias, but this can lead to improved prediction performance compared to OLS, particularly when dealing with multicollinearity or high-dimensional data.

When \(\lambda = 0\), Ridge regression reduces to OLS, and both MSEs are the same because the bias is zero, and the variance matches that of OLS. However, when \(\lambda > 0\), Ridge regression introduces bias but compensates by reducing the variance, thanks to the shrinkage of the coefficients.

For small values of \(\lambda\), the bias is minimal, and Ridge regression behaves similarly to OLS. In this scenario, Ridge regression manages to reduce the variance slightly without introducing much bias. As a result, the MSE of Ridge can be lower than that of OLS, meaning Ridge regression is able to outperform OLS by stabilizing the coefficient estimates while maintaining low bias. On the other hand, when \(\lambda\) is large, the bias introduced by Ridge regression increases substantially as the model shrinks the coefficients more aggressively. Although the variance decreases with larger \(\lambda\), the overall MSE can increase due to the growing bias. In such cases, Ridge regression may underfit the data, and its MSE can become larger than that of OLS because the model is too biased to capture the true relationships in the data.

In Ridge regression, for it to have a lower MSE than OLS, the regularization parameter \(\lambda\) must satisfy the inequality:

\[\begin{equation} \lambda < \frac{2 \sigma^2 \sum_{i=1}^{n} x_{ik}^2}{\beta_k^2 \sum_{i=1}^{n} x_{ik}^2 - \sigma^2} \end{equation}\]

This inequality provides a threshold for \(\lambda\). If \(\lambda\) is below this threshold, Ridge regression will outperform OLS in terms of MSE. However, if \(\lambda\) exceeds this value, the bias introduced by Ridge regression becomes too large, and the OLS estimator will have a lower MSE.

While this formula offers valuable theoretical insight, calculating the exact value of \(\lambda\) in practice is difficult. This is primarily because the formula depends on the true coefficient values \(\beta_k\) and the variance of the error term \(\sigma^2\), both of which are unknown in real-world scenarios. The whole purpose of regression is to estimate the coefficients \(\beta_k\), and we don’t have access to their true values in practice. Similarly, the true variance of the error term \(\sigma^2\) is also unknown and, while it can be estimated, this estimation introduces additional uncertainty. Therefore, because \(\beta_k\) and \(\sigma^2\) are unknown, the formula for the optimal \(\lambda\) cannot be computed exactly, making the direct calculation of \(\lambda\) impractical in real-world settings.

Even if we were able to approximate \(\beta_k\) and \(\sigma^2\), the theoretical formula for \(\lambda\) is based on several simplifying assumptions, such as independent and identically distributed (i.i.d.) errors, linearity, and no model misspecification. In real-world datasets, the relationships between variables are often more complex than the assumptions of the model. There may be nonlinearities, interactions, multicollinearity, or other complexities that affect model performance. Furthermore, the noise distribution may not follow the standard assumptions of homoscedasticity or normality. These complexities mean that the theoretically derived \(\lambda\) might not generalize well to the specific characteristics of the dataset being analyzed.

Cross-validation is a practical, data-driven method for finding \(\lambda\) because it directly tests how different values of \(\lambda\) perform on unseen data, without relying on theoretical assumptions about the true model or unknown parameters like \(\beta_k\) and \(\sigma^2\). While the theoretical formula for \(\lambda\) is valuable for understanding the trade-off between bias and variance, cross-validation is the preferred method in real-world applications. It avoids the need for unknown parameters, accounts for complexities in the data, and directly optimizes the model’s performance on unseen data, making it the go-to approach for tuning the \(\lambda\) hyperparameter in Ridge regression.

In addition to cross-validation, there are several other methods that can be used to select the regularization parameter \(\lambda\) in Ridge regression. While cross-validation remains the most commonly used method due to its flexibility and robustness, alternatives like bootstrap or analytical approaches such as generalized cross-validation, AIC/BIC, SURE, empirical bayes, and the L-curve method also offer ways to estimate the performance of different \(\lambda\) values in real-world scenarios. These methods may be preferable depending on the context, computational considerations, or available prior information (such as knowledge of noise variance). Each method has its advantages and trade-offs, and the choice depends on the specific characteristics of your data and modeling needs.

In summary, Ridge regression’s performance is determined by balancing bias and variance. When \(\lambda\) is small, Ridge regression performs similarly to OLS but with lower variance, making it advantageous in the presence of multicollinearity. However, when \(\lambda\) becomes too large, the bias increases, and the MSE may eventually surpass that of OLS. Therefore, selecting the optimal \(\lambda\), often through cross-validation, is essential for minimizing the MSE by finding the right balance between bias and variance.

The L2 penalty in Ridge regression shrinks the coefficients toward zero without setting any of them exactly to zero. This shrinkage stabilizes the coefficient estimates, reducing variance and improving predictive performance on new, unseen data. Ridge regression does not perform variable selection, unlike Lasso, but its ability to handle multicollinearity makes it a powerful tool for dealing with high-dimensional data.

12.1.1 Standardization of Predictors

It is important to consider the role of standardization when applying regularization. In practice, predictor variables \(\mathbf{X}\) are often on different scales, which can cause larger-scaled variables to dominate the regularization penalty, leading to inconsistent shrinkage across coefficients. Regularization penalizes models for large coefficients, and the magnitude of these coefficients depends on two key factors: the strength of the relationship between the input variable \(x\) and the output variable \(y\), and the units of measurement of \(x\). For instance, if a predictor represents weight and is measured in grams, its coefficient will differ significantly compared to when the same variable is measured in kilograms. This is because the coefficient must adjust to reflect the unit of measurement, inflating or deflating accordingly.

To ensure that the regularization focuses on the strength of the relationship between predictors and the response variable, rather than the units of measurement, it is essential to standardize the features. Standardization involves transforming the variables to have a mean of 0 and a standard deviation of 1. This adjustment ensures that the regularization penalty applies equally across all predictors, allowing the coefficients to reflect only the importance of each predictor in predicting \(y\).

Without standardization, larger-scaled variables could receive a disproportionately large penalty, distorting the model’s performance. Standardizing the data allows Ridge regression to apply the same regularization parameter \(\lambda\) across different datasets, irrespective of their scales or sample sizes, leading to more stable and interpretable results. The test and validation sets should also be standardized using the mean and standard deviation derived from the training set to avoid data leakage and ensure consistency during evaluation. Standardizing the predictors does not alter the fundamental relationship between Ridge and OLS estimates in terms of their mathematical form. However, it simplifies the calculation and interpretation by ensuring that each predictor contributes equally to the penalty term, thereby standardizing the effect of regularization across all predictors.

In Ridge regression, it is customary to standardize predictor variables before fitting the model. This is done by transforming each predictor as follows:

\[\begin{equation} x_{ij}^{\text{std}} = \frac{x_{ij} - \bar{x}_j}{s_j} \end{equation}\]

where \(\bar{x}_j\) is the mean of the \(j\)-th predictor across all \(n\) observations, and \(s_j = \sqrt{\frac{1}{n} \sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2}\) is the standard deviation of the \(j\)-th predictor. This process ensures that the regularization penalty is applied consistently across all predictors, making the model’s coefficients comparable and independent of the variables’ units. Standardization thus plays a crucial role in ensuring the fairness and effectiveness of the regularization process, allowing Ridge regression to focus on the predictive strength of each variable rather than its scale.

12.1.2 Implementation and Simulation of Ridge Regression

To begin the Ridge regression simulation, the first step is Data Preparation. This involves centering and standardizing the predictor variables to ensure that all features are on the same scale, allowing the Ridge penalty to be applied consistently across them. Additionally, if the intercept term is omitted from the model, the response variable should also be centered to maintain consistency with the standardized predictors.

Next is the Parameter Selection process. Here, we select a grid of \(\lambda\) values to test. To find the optimal \(\lambda\), \(k\)-fold cross-validation is employed. This method evaluates the model’s performance for each value of \(\lambda\) by dividing the data into training and validation sets multiple times. The value of \(\lambda\) that minimizes the cross-validation error is chosen as the optimal regularization parameter.

Once the optimal \(\lambda\) has been determined, the Model Fitting step begins. Using the selected \(\lambda\), the Ridge regression coefficients are computed, applying the regularization penalty that shrinks the coefficients toward zero without setting any of them exactly to zero.

Finally, in the Model Evaluation phase, the predictive performance of the Ridge regression model is assessed. This can be done by evaluating its performance on a separate test set or by using additional cross-validation. The goal is to compare the Ridge regression model’s ability to generalize to new data, particularly in relation to an OLS model.

In the following Ridge regression simulation in R, we generate synthetic data, fit a Ridge regression model using the glmnet package, and then evaluate its performance by comparing it to OLS results. This step-by-step approach demonstrates how Ridge regression can be applied in practice to address multicollinearity and improve predictive accuracy.

# Load necessary libraries

library(glmnet)

# 1. Generate synthetic data

set.seed(123) # For reproducibility

n <- 100 # Number of observations

p <- 10 # Number of predictors

# Create a matrix of independent variables (X) and response variable (y)

X <- matrix(rnorm(n * p), nrow = n, ncol = p) # Random normal data for predictors

beta <- rnorm(p) # Random coefficients

y <- X %*% beta + rnorm(n) # Linear relationship with noise

# 2. Split data into training and testing sets

set.seed(456)

train_idx <- sample(1:n, size = 0.8 * n, replace = FALSE)

X_train <- X[train_idx, ]

X_test <- X[-train_idx, ]

y_train <- y[train_idx]

y_test <- y[-train_idx]

# 3. Fit a Ridge regression model

# Ridge regression with alpha = 0 (L2 regularization)

ridge_model <- glmnet(X_train, y_train, alpha = 0)

# 4. Cross-validation to find the best lambda

cv_ridge <- cv.glmnet(X_train, y_train, alpha = 0)

# 5. Get the best lambda value

best_lambda <- cv_ridge$lambda.min

cat("Best lambda for Ridge regression:", best_lambda, "\n")## Best lambda for Ridge regression: 0.2529632# 6. Fit the final Ridge model with the best lambda

ridge_final <- glmnet(X_train, y_train, alpha = 0, lambda = best_lambda)

# 7. Make predictions on the test set

y_pred_ridge <- predict(ridge_final, s = best_lambda, newx = X_test)

# 8. Evaluate the model performance

mse_ridge <- mean((y_test - y_pred_ridge)^2)

cat("Mean Squared Error for Ridge regression on test set:", mse_ridge, "\n")## Mean Squared Error for Ridge regression on test set: 2.315453# 9. Compare with Ordinary Least Squares (OLS)

ols_model <- lm(y_train ~ X_train)

y_pred_ols <- predict(ols_model, newdata = data.frame(X_train = X_test))

# Mean Squared Error for OLS

mse_ols <- mean((y_test - y_pred_ols)^2)

cat("Mean Squared Error for OLS on test set:", mse_ols, "\n")## Mean Squared Error for OLS on test set: 34.04085

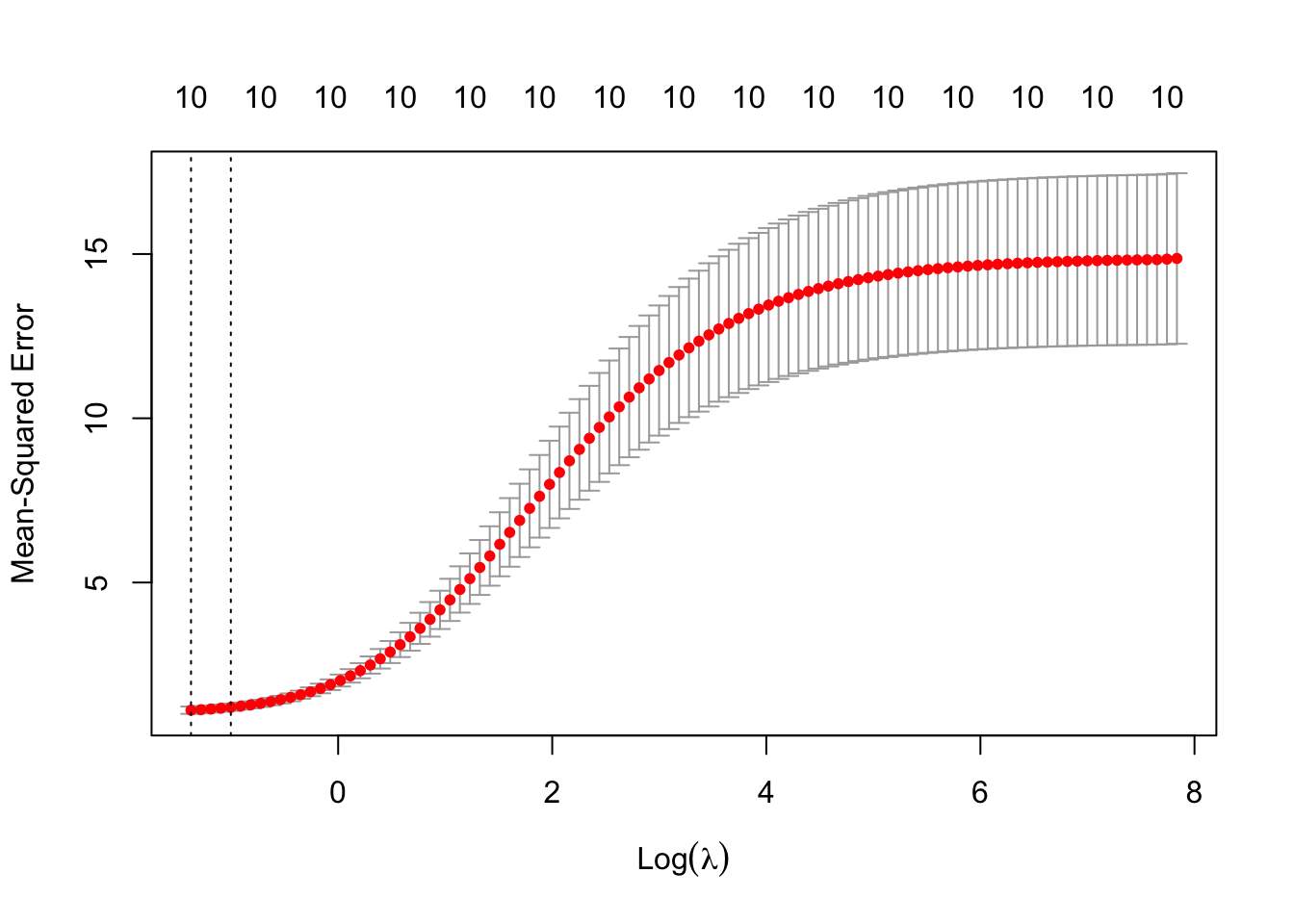

FIGURE 12.1: Cross-validation results for Ridge regression: optimal lambda selection

In this simulation, we demonstrate how Ridge regression works using synthetic data. The steps involved include generating data, fitting the Ridge model, selecting the optimal regularization parameter \(\lambda\) via cross-validation, and evaluating the model’s performance. The code uses the glmnet package in R, which is commonly employed for fitting generalized linear models, including Ridge regression (L2 regularization) and Lasso regression (L1 regularization). Let’s break down each step of the simulation:

Data Generation:

We begin by generating synthetic data. In this example, we create a dataset with 100 observations (rows) and 10 predictors (columns). The predictors are generated randomly from a normal distribution, and the response variableyis created as a linear combination of these predictors, with some additional random noise added to simulate real-world conditions where data is rarely perfectly linear. This ensures that the dataset mimics a scenario with some inherent variability and noise.Train-Test Split:

After generating the data, the dataset is split into two parts: 80% of the data is used for training the model, and 20% is held out as a test set. This is done to assess how well the model generalizes to unseen data. The training set is used to fit the Ridge regression model, and the test set is reserved for evaluating the model’s performance later.Fitting Ridge Regression:

Ridge regression is fitted using theglmnet()function from theglmnetpackage. Theglmnet()function is highly flexible and allows the user to fit both Ridge and Lasso models by adjusting thealphaparameter. For Ridge regression, we setalpha = 0, which indicates that L2 regularization (Ridge) is being applied. The Ridge regression model introduces a penalty for large coefficients, shrinking them toward zero to prevent overfitting, especially in cases where multicollinearity is present among predictors.Cross-Validation for \(\lambda\) Selection:

To determine the optimal value of \(\lambda\), the regularization parameter, we use thecv.glmnet()function, which performs 10-fold cross-validation. Cross-validation is a technique where the training data is divided into 10 parts, and the model is trained on 9 parts while the remaining 1 part is used for validation. This process is repeated 10 times, and the average performance across all folds is calculated. The value of \(\lambda\) that minimizes the cross-validation error is selected as the best \(\lambda\). This step is crucial because it ensures that we choose the level of regularization that best balances bias and variance.Final Ridge Model with Best \(\lambda\):

Once the best \(\lambda\) value is identified through cross-validation, we fit the final Ridge regression model using this optimal \(\lambda\) value. Theglmnet()function is called again, this time with the best \(\lambda\), to compute the Ridge coefficients that minimize the loss function (sum of squared errors) while applying the chosen regularization penalty.Model Evaluation:

To evaluate the performance of the Ridge regression model, we use the test set that was set aside earlier. Predictions are made on the test data using the fitted Ridge model, and the Mean Squared Error (MSE) is calculated to assess the model’s accuracy. The MSE measures the average squared difference between the actual and predicted values, providing an indicator of how well the model generalizes to unseen data. The lower the MSE, the better the model is at predicting new data.Comparison with OLS:

For comparison, an OLS regression model is also fitted using thelm()function. The OLS model does not apply any regularization, so it may overfit the data, especially when multicollinearity is present. The MSE of the OLS model is computed on the same test set, allowing us to directly compare the predictive performance of Ridge regression with OLS. Since Ridge regression shrinks the coefficients, it typically yields a lower MSE on the test set compared to OLS, especially when multicollinearity or high-dimensional data is present.Visualization of Cross-Validation Results:

Finally, the results of the cross-validation process are visualized using a plot. The plot displays the cross-validation errors for different values of \(\lambda\), highlighting the best \(\lambda\) that minimizes the error. This graphical representation helps illustrate how different levels of regularization affect the model’s performance. In addition, numbers shown above the vertical bars indicate the number of nonzero coefficients in the model for each \(\lambda\) value. For ridge regression, these numbers are typically constant (e.g., “10”) because ridge does not set coefficients exactly to zero—it shrinks them continuously.

At the end of the simulation, it’s important to note that the grid of \(\lambda\) values used in Ridge regression plays a crucial role in determining the model’s performance. By default, the glmnet package selects around 100 values of \(\lambda\), typically ranging from a very large value to a very small one. The largest \(\lambda\) value is chosen so that all coefficients are shrunk very close to zero, meaning the model is almost entirely regularized. The smallest value of \(\lambda\) approaches zero, meaning the regularization effect is minimal, and the model behaves similarly to OLS. This ensures that the model is evaluated across a broad spectrum of regularization strengths. Because of this wide range, the figure above presents \(\log(\lambda)\) on the x-axis to make the range of \(\lambda\) values easier to visualize.

While the default grid works well in most cases, you may want to customize the grid of \(\lambda\) values using the lambda argument in the glmnet() or cv.glmnet() function for finer control. Customizing the grid can be useful if you have prior knowledge about the scale of regularization that is appropriate for your data, if you want to explore a finer or coarser set of \(\lambda\) values, or if the default grid does not cover the range of \(\lambda\) values that interests you. By exploring different values of \(\lambda\), Ridge regression is able to balance bias and variance, optimizing the model’s predictive accuracy. Here’s how you can customize the \(\lambda\) grid at step 3 above:

To further analyze the results of the simulation, Ridge regression coefficients can be extracted and compared to OLS coefficients. This can be added in step 6 of the simulation for Ridge regression and in step 9 for OLS. By using the coef() function in R, we can present the Ridge coefficients for the selected \(\lambda\), and then compare them to the OLS coefficients.

# Step 6: Extract Ridge regression coefficients for the best lambda

ridge_coefs <- coef(ridge_final)

print(ridge_coefs)

# Step 9: Extract OLS coefficients

ols_coefs <- coef(ols_model)

print(ols_coefs)Ridge regression coefficients can be interpreted similarly to OLS coefficients, in that they represent the impact of each predictor on the response variable. However, the Ridge regression coefficients are typically smaller than the OLS coefficients due to the regularization penalty, which shrinks the coefficients to reduce overfitting and stabilize the model in the presence of multicollinearity. Unlike OLS, where large coefficients can arise when predictors are highly correlated, Ridge introduces a bias but reduces variance, resulting in more stable and reliable estimates. The Ridge model retains all predictors, but their influence is diminished based on the strength of the regularization parameter \(\lambda\). This provides a better balance between bias and variance, leading to improved predictive performance on new, unseen data compared to OLS.

In summary, this simulation demonstrates the use of Ridge regression for handling multicollinearity and improving model generalization. The glmnet package provides an efficient and user-friendly way to apply Ridge regression, select the optimal regularization parameter via cross-validation, and evaluate model performance. Ridge regression is particularly useful when predictors are highly correlated or when dealing with high-dimensional datasets, as it shrinks the coefficients to prevent overfitting while maintaining good predictive accuracy.

Ridge regression is widely implemented in various statistical software packages, including R, Python, and Stata. In R, the glmnet package is commonly used for Ridge regression, providing a flexible and efficient framework for applying Ridge and Lasso regression. In Python, the scikit-learn library includes Ridge regression as part of its suite of linear models, making it easy to apply and experiment with regularization techniques. Additionally, Stata offers Ridge regression functionality through specifying \(\alpha=0\) while using the elasticnet command, allowing users to fit models with L2 regularization within the familiar Stata environment. These platforms provide robust tools for applying Ridge regression, making it accessible and practical for a wide range of data analysis tasks.

Ridge regression can also be extended to other types of models, such as Generalized Linear Models (GLMs). By modifying the loss function to account for different response variables, Ridge regression can be applied to logistic regression for binary outcomes, among other models. Additionally, Ridge regression has a Bayesian interpretation. In this view, the coefficients have a Gaussian prior distribution with mean zero and variance proportional to \(1/\lambda\), expressed as \(\beta_j \sim \mathcal{N}\left( 0, \frac{\sigma^2}{\lambda} \right)\). This Bayesian perspective adds another layer of understanding to how the regularization in Ridge regression works, as it can be seen as imposing a prior belief that the coefficients should be small unless there is strong evidence from the data to support larger values.

Ridge Regression is a powerful tool for addressing multicollinearity and improving the predictive performance of linear models by introducing a penalty term that balances the bias-variance trade-off. Its ability to provide more stable coefficient estimates makes it particularly useful for handling datasets with many correlated predictors. Although Ridge regression does not perform variable selection, it effectively reduces variance in exchange for a slight bias, which often leads to better generalization to new data. Its computational efficiency, even with large datasets, is another key advantage. However, the lack of variable selection can make interpretation more challenging, as all predictors remain in the model, albeit with shrunk coefficients. Despite this limitation, Ridge regression remains an essential technique for statisticians and data scientists working with complex, high-dimensional datasets.

12.2 Lasso Regression

Lasso Regression, short for Least Absolute Shrinkage and Selection Operator, improves traditional linear regression by incorporating a penalty term proportional to the absolute value of the coefficients. The primary purpose of Lasso is to encourage sparsity in the model, i.e., to shrink some coefficients to exactly zero, effectively performing variable selection. Sparsity, in this context, refers to the situation where a significant portion of the coefficients in a model are zero or near-zero, effectively reducing the complexity of the model and focusing on fewer, more significant variables. This concept is particularly useful in high-dimensional datasets — where the number of predictors p exceeds the number of observations n—, which often contain many predictors that are not essential for accurate predictions. Lasso’s ability to induce sparsity helps in variable (feature) selection by retaining only the most relevant predictors, thus simplifying the model and improving interpretability.

We want to emphasize some basic facts about Lasso solutions. There is either a unique Lasso solution or an (uncountably) infinite number of solutions, highlighting the potential for multiple equivalent models especially in scenarios with high-dimensional data. Solutions is not unique unless rank(X) = p. This condition, rank(X) = p, means that all the columns (predictors) in the matrix X are linearly independent, with no column being a linear combination of any others, which is crucial for ensuring a unique solution in traditional regression analysis. When this condition is met, the matrix X has full column rank, and the matrix \(X^\top X\) is invertible, a necessary condition for the uniqueness.

However, regardless of the number of solutions, each one will predict the same outcomes, ensuring that predictions are consistent across different models. If the regularization parameter, often symbolized by lambda, is greater than zero, all solutions will have the same total amount of shrinkage applied across their coefficients. This ensures that the predictions remain consistent across different models, even if the specific coefficients differ.These properties highlight the complexities inherent in interpreting Lasso results, particularly in cases with many predictors.19

One important consideration is how Lasso selects the best-fitting linear combinations of predictors, which may not necessarily correspond to the best individual predictors. This distinction is particularly important when variables are correlated, as is often the case in observational studies in economics, health, and social sciences, where potential predictors/regressors include powers and interactions of underlying variables. In such scenarios, Lasso prioritizes selecting the optimal combination of predictors rather than isolating the best individual predictors. This nuance is essential when interpreting Lasso results, as the exact variables chosen can vary across repeated samples or different data partitions in k-fold cross-validation.

It’s crucial to understand that variable selection in Lasso regression can be approached from two perspectives: identifying the set of true relevant covariates for statistical inference, or using dimension reduction to improve predictive accuracy without necessarily capturing the true model. The former is known as the oracle property, where the Lasso estimator consistently selects the true model as the sample size increases. The latter focuses on the model’s predictive performance, where the goal is to minimize the prediction error without necessarily identifying the true underlying model. The Lasso does not typically satisfy the oracle property on its own. To address the limitations of LASSO and achieve the oracle property, modifications to the basic Lasso, such as the Adaptive Lasso, which is discussed next section, have been proposed. Both perspectives are essential in understanding the role of Lasso regression in practice.20

Major limitation of the LASSO’s penalization process is its bias relative to OLS, which we will discuss further below. To address this, a two-step procedure known as ‘Double LASSO’ is often employed. Initially, LASSO regression selects variables by shrinking some coefficients to zero, focusing on the most relevant predictors and simplifying the model. In the second step, an OLS regression is performed on the selected predictors, providing unbiased estimates since it only involves variables deemed significant in the first step, thereby avoiding the multicollinearity typically seen with larger sets. This method, which balances variable selection with precise estimation, will be discussed further in the next chapter. Overall, LASSO is widely used for variable selection in statistical inference within economics, social sciences, and health sciences, though it is less commonly used for pure prediction due to the availability of more effective predictive models.

Let’s now examine the foundational aspects of Lasso itself. The Lasso objective function is similar to Ridge regression but uses the \(L1\) norm of the coefficients instead of the \(L2\) norm. The Lasso loss function can be written as:

\[\begin{equation} \min_{\boldsymbol{\beta}} \left\{ L(\boldsymbol{\beta}) = \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2 + \lambda \sum_{j=1}^{p} |\beta_j| \right\} \end{equation}\] where \(y_i\) are the response variable, \(x_{ij}\) are the predictor variables, \(\beta_j\) are the coefficients to be estimated. \(\lambda\) is the regularization/shrinkage parameter that controls the strength of the Lasso penalty. The term \(\sum_{j=1}^{p} |\beta_j|\) is the \(L1\) norm, which shrinks some coefficients to be exactly zero.

This optimization problem is equivalent to minimizing the sum of squares subject to the constraint \(\sum_{j=1}^{p} |\beta_j| \leq s\), where higher values of \(s\) correspond to lower values of \(\lambda\). When \(p = 2\), this constraint becomes \(|\beta_1| + |\beta_2| \leq s\), which defines the diamond-shaped region commonly illustrated in Lasso figures, such as those in Tibshirani’s book or notes widely used to explain Lasso.

The Lasso optimization function is also presented with different scaling factors like \(1/2\), \(1/n\), or \(1/2n\) in front of the loss term. These factors simply adjust the relative scaling of the residual sum of squares term, but the solutions to the optimization problem remain equivalent because the penalty term is unaffected. After standardizing predictors (i.e., centering each predictor to have mean 0 and scaling it to have unit variance), the version of the LASSO optimization problem scaled by \(1/2n\) is generally preferred for its mathematical consistency and practical interpretability. All these variations are equivalent, and the function is convex, which guarantees that it always has at least one solution. However, if \(p > n\), there may be multiple minimums (see Tibshirani, 2013, or Schneider & Tardivel, 2020, for more details).

For a tuning parameter \(\lambda \geq 0\) , solving the Lasso optimization problem produces sparse solutions, meaning that some coefficients are exactly zero. Larger values of \(\lambda\) result in sparser solutions. Sparsity is desirable for two key reasons: first, it performs variable selection in the fitted linear model, offering interpretability by identifying which features are most important; second, it often improves prediction when the underlying regression function can be well-approximated by a sparse linear model.

It is common to standardize the predictors before fitting a LASSO regression model to ensure that the regularization penalty is applied uniformly across all variables. Standardizing predictors is a crucial step when applying LASSO because the penalty term \(\lambda \sum_{j=1}^p |\beta_j|\) treats all predictors equally, regardless of their scale. If the predictors are not standardized, variables with larger scales, such as income measured in thousands, may dominate the penalty term, causing their coefficients to be penalized more heavily and shrunk disproportionately toward zero. Conversely, predictors with smaller scales, such as percentages, might be penalized less and retained in the model even if they are less important.

Standardization ensures that all predictors are on the same scale by centering each variable to have a mean of zero and scaling it to have a standard deviation of one. This equalizes the influence of the predictors on the penalty term, making the LASSO regression fair in its treatment of variables. Without standardization, the model’s variable selection process may be biased, leading to suboptimal or misleading results.

Moreover, standardization aligns the predictors with the normalization typically applied to the residual sum of squares in LASSO, particularly in versions of the optimization problem that use scaling by \(1/n\) or \(1/2n\). This consistency makes the results of LASSO more interpretable and ensures that comparisons across predictors and models are meaningful.

After standardizing the predictors and using the scaled version of the LASSO optimization problem, we encounter a key characteristic of Lasso regression: it does not have a closed-form solution. Unlike Ridge or OLS regression, where the estimated coefficients \(\hat{\beta}\) can be directly computed using an explicit formula, Lasso introduces an additional layer of complexity.

For Ridge and OLS regression, the closed-form solution allows us to plug the data \(X, \gamma\) and the regularization parameter \(\lambda\) into a formula to compute the coefficients in a single step. However, Lasso regression applies an \(L1\) penalty, which is the sum of the absolute values of the coefficients. This absolute value term disrupts the straightforward derivation of an explicit formula for the Lasso solution \(\hat{\beta}_{Lasso}\), making it impossible to solve directly in one step.

To estimate the Lasso coefficients, we rely on iterative optimization algorithms. These algorithms progressively refine the solution until convergence. Two commonly used algorithms for solving the Lasso optimization problem are Coordinate Descent and Least Angle Regression (LARS). While both methods can solve the Lasso problem, they differ in their approach. Coordinate descent focuses on iterative, localized updates to each coefficient, while LARS takes a more global approach by navigating along a solution path that optimally adjusts multiple coefficients in response to residual correlations. Despite its utility in certain contexts, LARS is less commonly used in practice compared to coordinate descent, which has become the standard for most Lasso applications due to its versatility and practical performance. Among these, coordinate descent stands out as the preferred algorithm in modern applications.

12.2.1 Coordinate Descent for Lasso Regression

The concept of using coordinate descent for Lasso regression was initially introduced by Fu (1998) and later explored by Daubechies et al. (2004). However, it gained significant popularity following its implementation in the glmnet package by Friedman et al. (2007, 2010). Coordinate Descent can be applied as a convex optimization method when the convex function can be written as a separable function with two components: one that is convex and smooth, and another that is convex but non-smooth. Specifically, the Lasso loss function comprises two additive components. The first component, the residual sum of squares, is convex, differentiable, and smooth. The second component of the loss term ,\(\sum_{j=1}^{p} |\beta_j|\), is convex but non-differentiable.

The Lasso solution is not closed-form (a closed-form solution is an explicit mathematical expression that can be computed directly) like Ridge and OLS, but it can be written as:

\[\begin{equation} \hat{\beta}_k^{LASSO} = \frac{1}{n} \left[ \sum_{i=1}^{n} x_{ik} \left( y_i - \sum_{j \neq k} \tilde{\beta}_j x_{ij} \right) - \lambda \right]_+ \end{equation}\]

where \([z]_+\) denotes the soft-thresholding operator, which sets \(\hat{\beta}_k\) to zero if the penalization term \(\lambda\) is too large compared to the residual correlation. This is why Lasso can shrink some coefficients exactly to zero.

The coordinate descent algorithm iteratively updates each coefficient one at a time while keeping the others fixed, repeating the process until the solution converges (i.e., the coefficients change minimally between iterations). The initialization process begins by standardizing the predictors \(x_j\) and the response \(y\) to have a mean of zero and a variance of one, ensuring all predictors are on the same scale. The initial regularization parameter, \(\lambda_{\text{max}}\), is the smallest value of \(\lambda\) at which all coefficients are zero. As \(\lambda\) decreases geometrically from \(\lambda_{\text{max}}\) to \(\lambda_{\text{min}}\), predictors sequentially enter the model based on their correlation strength, allowing the algorithm to explore the regularization path.

Now, let us discuss each step of the algorithm in detail to calculate coefficients \(\beta_j\) in the first iteration. Initially, all coefficients are set to zero, and the first step begins with the residual calculation.

In the first iteration, the residual is simply the response variable, \(r = y\), because no predictor contributions are included (all coefficients are zero). For each coefficient \(\beta_j\), the algorithm computes the correlation between the predictor \(x_j\) and \(r = y\). Using this correlation, the coefficient is updated using the soft-thresholding operator:

\[\begin{equation} \beta_j \leftarrow S\left(\frac{1}{n} \sum_{i=1}^n x_{ij} r_i, \lambda_{\text{max}}\right), \end{equation}\]

where the soft-thresholding operator is defined as:

\[\begin{equation} S(z, \lambda) = \text{sign}(z) \cdot \max(|z| - \lambda, 0). \end{equation}\]

Here, \(z= \frac{1}{n} \sum_{i=1}^n x_{ij} r_i\) represents the scaled correlation between the predictor \(x_j\) and the response \(y\), while \(\lambda_{\text{max}}\) acts as a threshold. If this correlation is smaller than \(\lambda_{\text{max}}\), the coefficient is set to zero, indicating that the predictor \(x_j\) does not enter the model in this iteration. Otherwise, \(\beta_j\) takes a nonzero value, reflecting its contribution to the model.(If \(|\frac{1}{n} \sum_{i=1}^n x_{i1} r_i| \leq \lambda\): The coefficient \(\beta_1\) is set to zero, meaning \(x_1\) is excluded from the model. If \(|\frac{1}{n} \sum_{i=1}^n x_{i1} r_i| > \lambda\): The coefficient \(\beta_1\) is updated to a nonzero value which is the scaled correlation between the predictor and the response.

The initial regularization parameter \(\lambda_{\text{max}}\) is calculated as the largest value of \(\lambda\) at which all coefficients are zero. It is defined as:

\(\lambda_{\text{max}} = \max_j \left| \frac{1}{n} \sum_{i=1}^n x_{ij} y_i \right|.\)

This value ensures that the penalty is large enough to suppress all predictors, serving as the starting point for the algorithm. After calculating \(\lambda_{\text{max}}\), the algorithm proceeds to compute coefficients for all predictors \(\beta_1, \beta_2, \dots, \beta_n\) using the same residual \(r = y\) and the soft-thresholding operator.

At the end of the first iteration, some coefficients may remain zero if their correlation with \(y\) is insufficient to overcome the threshold set by \(\lambda_{\text{max}}\). The result is an initial sparse solution, where only strongly correlated predictors have nonzero coefficients.

In subsequent iterations, the regularization parameter \(\lambda\) is decreased according to the sequence:

\(\lambda_k = \lambda_{\text{max}} \times \left(\frac{\lambda_{\text{min}}}{\lambda_{\text{max}}}\right)^{\frac{k-1}{\text{nlambda} - 1}},\) where \(\lambda_{\text{min}}\) is a fraction of \(\lambda_{\text{max}}\), controlled by \(\text{lambda.min.ratio}\) (default: 0.0001 for \(p \leq n\) or 0.01 for \(p > n\)), and \(\text{nlambda}\) is the number of values in the sequence (default: 100).

As \(\lambda\) decreases, the residual is recalculated to incorporate contributions from nonzero coefficients from the previous step as a “warm start”:

\[\begin{equation} r = y - \sum_{j=1}^p x_j \beta_j^{\text{(previous)}} \end{equation}\]

This updated residual reflects the remaining variation in \(y\) after accounting for predictors already included in the model. For each predictor, the coefficient is updated iteratively using the soft-thresholding operator with the new \(\lambda_k\):

\[\begin{equation} \beta_j \leftarrow S\left(\frac{1}{n} \sum_{i=1}^n x_{ij} r_i, \lambda_k\right) \end{equation}\]

The process continues iteratively for all coefficients and all values of \(\lambda_k\), progressively building the regularization path.

The algorithm stops when the coefficients converge, as determined by a stopping criterion. Convergence is achieved when the relative change in the coefficients between iterations falls below a predefined tolerance level: \(\frac{\|\beta^{(t)} - \beta^{(t-1)}\|}{\|\beta^{(t-1)}\|} < \text{tolerance}.\) The default tolerance in glmnet is \(10^{-4}\), but it can be adjusted using the \(\text{thresh}\) parameter. This ensures that the algorithm produces a stable and reliable solution for each value of \(\lambda_k\).

The coordinate descent method is particularly effective for Lasso regression due to its efficiency and suitability for high-dimensional data. By updating coefficients one at a time through an iterative process, it remains computationally efficient even with large numbers of predictors. The method leverages the soft-thresholding rule to identify the most relevant predictors while shrinking others to zero, producing a sparse solution that balances model complexity and predictive accuracy. Although coordinate descent delivers solutions for specific \(\lambda\) values rather than the entire solution path, its simplicity, efficiency, and adaptability make it a practical tool for Lasso and other regularization methods like Elastic Net, especially in data analysis where direct solutions are unavailable.

12.2.2 Least Angle Regression (LARS) for Lasso

Least Angle Regression (LARS) incrementally builds the solution path by sequentially adding predictors and adjusting coefficients based on their correlation with the residuals. It is particularly efficient when the number of predictors (\(\rho\)) is large, making it a valuable tool for understanding the full solution path of Lasso regression. While LARS was originally designed for linear regression, it can be modified to solve the Lasso problem by introducing a Lasso constraint.

The LARS algorithm begins with all coefficients initialized to zero, ensuring that no predictors are included in the model initially. The predictors \(x_j\) and the response \(y\) are first standardized to have mean zero and variance one, ensuring all predictors are on the same scale. Once standardized, the coefficients are set to zero, and the residuals are initialized as \(r = y\). At this stage, the algorithm proceeds to identify the most correlated predictor by computing the correlation between each predictor \(x_j\) and the residuals \(r\) as \(c_j = x_j^T r\). The predictor with the highest absolute correlation, \(x_k\), is selected since it has the strongest relationship with the unexplained variance in \(y\).

After selecting the most correlated predictor, the algorithm moves along the least angle direction, which ensures that the residuals are minimized in the direction of \(x_k\). The term “least angle regression” refers to the property of moving in the direction that forms the smallest angle with the residual vector. To achieve this, the active set of predictors is defined, initially containing only \(x_k\). The active predictors, represented as \(X_A\), and their corresponding coefficients, \(\beta_A\), are used to calculate the direction vector \(u_A = X_A (X_A^T X_A)^{-1} s_A\), where \(s_A = \text{sign}(X_A^T r)\) captures the signs of the correlations of the active predictors with the residuals. The algorithm then computes the step size \(\gamma\) to determine how far to move along this direction before another predictor becomes equally correlated with the residuals: \[ \gamma = \min_{j \notin A} \left\{ \frac{C - c_j}{1 - a_j}, \frac{C + c_j}{1 + a_j} \right\}, \] where \(C = \max_j |c_j|\) is the maximum absolute correlation, \(c_j = x_j^T r\) is the correlation of predictor \(x_j\) with the residuals, and \(a_j = x_j^T u_A\) is the projection of \(x_j\) onto the direction vector \(u_A\). The coefficients of the active predictors are updated as \(\beta_A \leftarrow \beta_A + \gamma u_A\), and the residuals are recalculated as \(r \leftarrow r - \gamma u_A\). If another predictor becomes equally correlated with the residuals, it is added to the active set, and the process is repeated.

To calculate the initial value of \(\lambda\), which determines the Lasso constraint, the algorithm computes the maximum absolute correlation of the predictors with the response variable. This value, referred to as \(\lambda_{\text{max}}\), is given by: \(\lambda_{\text{max}} = \max_j |x_j^T y|\), where \(x_j^T y\) represents the correlation of predictor \(x_j\) with the response \(y\). This ensures that at \(\lambda = \lambda_{\text{max}}\), all coefficients are exactly zero, providing a starting point for the solution path. The corresponding threshold \(t\) for the sum of absolute coefficients is initialized as \(t = 0\) since no predictors are active at this stage.

To enforce the Lasso constraint during the algorithm, the sum of the absolute values of the coefficients, denoted as \(S = \sum_{j=1}^p |\beta_j|\), is monitored. When this sum exceeds the threshold determined by the regularization parameter \(\lambda\), it triggers a re-scaling of the coefficients to ensure compliance with the Lasso constraint. The value of \(\delta\), representing the amount of shrinkage required, is calculated as: \(\delta = \frac{S - t}{|A|},\) where \(|A|\) is the number of active predictors, i.e., predictors with non-zero coefficients. The coefficients are then updated as: \[ \beta_j \leftarrow \text{sign}(\beta_j) \cdot \max(0, |\beta_j| - \delta), \] which proportionally reduces the magnitude of the coefficients and sets some coefficients to zero if their absolute value is less than \(\delta\). This ensures that the total sum of the coefficients satisfies the constraint \(S \leq t\). In practical terms, \(\lambda\) determines the degree of regularization: higher values of \(\lambda\) correspond to more stringent constraints (smaller \(t\)), which shrink coefficients more aggressively and encourage sparsity. Conversely, as \(\lambda\) decreases, \(t\) increases, allowing more coefficients to take non-zero values and thus introducing additional predictors into the model.

The LARS algorithm updates the solution path by iteratively adding new predictors to the active set and moving their coefficients in the least angle direction while maintaining the Lasso constraint. This process continues until all predictors are included or the regularization parameter \(\lambda\) reaches zero. The algorithm produces the entire solution path, which tracks how coefficients evolve as \(\lambda\) decreases. Convergence is achieved when the coefficients stabilize, ensuring a reliable solution for each value of \(\lambda\).

A key feature of LARS is its efficiency in computing the solution path for Lasso, providing valuable insights into the effects of regularization on model selection and sparsity. The threshold imposed on the sum of the absolute values of the coefficients is directly linked to \(\lambda\), and as \(\lambda\) decreases, the threshold increases, allowing more predictors to enter the model and their coefficients to grow. By adapting the standard LARS algorithm with the Lasso constraint, the method ensures a sparse and interpretable solution, making it a powerful tool for addressing high-dimensional regression problems.

12.2.3 Differences Between Coordinate Descent and LARS

Here are the main differences between Coordinate Descent and LARS in their approach to solving Lasso regression problems. First, Coordinate Descent uses an iterative optimization approach where each coefficient is updated one at a time while keeping the others fixed. This is done using the soft-thresholding operator applied to each predictor individually. In contrast, LARS builds the entire solution path in a geometric manner, incrementally adding predictors to the active set and updating coefficients of all active predictors simultaneously.

Second, in enforcing the Lasso constraint, Coordinate Descent uses implicit soft-thresholding to shrink coefficients. This approach ensures sparsity by setting coefficients to zero when their absolute value falls below the penalization threshold. The update for each coefficient in Coordinate Descent is performed as:

\[ \beta_j \leftarrow \text{sign}\left( \frac{1}{n} \sum_{i=1}^n x_{ij} r_i \right) \cdot \max\left(0, \left| \frac{1}{n} \sum_{i=1}^n x_{ij} r_i \right| - \lambda \right) \]

On the other hand, LARS explicitly monitors the sum of absolute coefficients, denoted as \(S = \sum_{j=1}^p |\beta_j|\), and ensures that this sum does not exceed a threshold \(t\) determined by the regularization parameter \(\lambda\). When \(S\) exceeds \(t\), LARS applies proportional shrinkage across all active coefficients to restore compliance with the constraint. The amount of shrinkage, \(\delta\), is calculated as: \(\delta = \frac{S - t}{|A|},\) where \(|A|\) is the number of active predictors. The coefficients are then updated as:

\[ \beta_j \leftarrow \text{sign}(\beta_j) \cdot \max(0, |\beta_j| - \delta). \]

This explicit enforcement ensures that the total sum of the absolute coefficients satisfies the constraint \(S \leq t\), while selectively shrinking or zeroing out coefficients that fail to meet the penalization criteria.

Third, the selection of predictors differs significantly. Coordinate Descent cycles through all predictors sequentially and updates each one iteratively until convergence. In contrast, LARS selects the predictor most correlated with the residuals at each step and dynamically adds it to the active set when its correlation equals that of the current active predictors.

Fourth, Coordinate Descent does not build the entire solution path but instead computes solutions for specific values of \(\lambda\). On the other hand, LARS explicitly constructs the entire solution path, showing how coefficients evolve as \(\lambda\) decreases.

Fifth, in terms of computational efficiency, Coordinate Descent is more scalable for high-dimensional datasets (\(p \gg n\)), as it updates one coefficient at a time without requiring matrix operations. LARS, while efficient for small to moderate datasets (\(p \leq n\)), involves matrix inversions for updating active predictors, which can be computationally intensive for large \(p\).

Sixth, LARS can suffer from numerical instability in the presence of highly collinear predictors due to its reliance on matrix operations. Coordinate Descent, by avoiding matrix inversion, is more stable in such scenarios.

Finally, the key use cases differ. Coordinate Descent is better suited for scenarios where the focus is on high-dimensional data or when solutions for specific \(\lambda\) values are needed, such as in cross-validation. LARS is more appropriate for moderate-sized datasets where understanding the full regularization path and predictor selection process is important.

12.2.4 Implementation and Simulation of Lasso Regression

To begin the Lasso regression simulation, the first step is Data Preparation. This involves centering and standardizing the predictor variables to ensure that all features are on the same scale, allowing the Lasso penalty to be applied consistently across them. Additionally, if the intercept term is omitted from the model, the response variable should also be centered to maintain consistency with the standardized predictors.

Next is the Parameter Selection process. Here, we define a grid of \(\lambda\) values to explore. To determine the optimal \(\lambda\), \(k\)-fold cross-validation is employed. This method evaluates the model’s performance for each value of \(\lambda\) by dividing the data into training and validation sets multiple times. The \(\lambda\) that minimizes the cross-validation error is chosen as the optimal regularization parameter.

Once the optimal \(\lambda\) has been determined, the Model Fitting step begins. Using the selected \(\lambda\), the Lasso regression coefficients are computed by applying the regularization penalty. Unlike Ridge regression, Lasso can shrink some coefficients exactly to zero, making it an effective method for feature selection.

Finally, in the Model Evaluation phase, the predictive performance of the Lasso regression model is assessed. This can be done by evaluating its performance on a separate test set or through additional cross-validation. The goal is to compare the Lasso regression model’s ability to generalize to new data and analyze which variables were selected by the model.

In the following Lasso regression simulation in R, we generate synthetic data, fit a Lasso regression model using the glmnet package, and evaluate its performance by comparing it to ordinary least squares (OLS) results. This step-by-step approach demonstrates how Lasso regression can be applied in practice to perform variable selection and improve predictive accuracy.

# Load the required package

library(glmnet)

# Step 1: Data Preparation

set.seed(123) # For reproducibility

n <- 100 # Number of observations

p <- 10 # Number of predictors

X <- matrix(rnorm(n * p), nrow = n, ncol = p) # Generate random predictors

beta <- c(3, -2, 0, 0, 1.5, 0, 0, 0, 0, 0) # True coefficients

y <- X %*% beta + rnorm(n) # Generate response with some noise

# Split data into training and testing sets

set.seed(456)

train_idx <- sample(1:n, size = 0.8 * n, replace = FALSE) # 80% training data

X_train <- X[train_idx, ]

X_test <- X[-train_idx, ]

y_train <- y[train_idx]

y_test <- y[-train_idx]

# Step 3: Fit a Lasso regression model

# Lasso regression with alpha = 1 (L1 regularization)

lasso_model <- glmnet(X_train, y_train, alpha = 1)

# Step 4: Cross-validation to find the best lambda

cv_lasso <- cv.glmnet(X_train, y_train, alpha = 1)

# Step 5: Get the best lambda value

best_lambda <- cv_lasso$lambda.min

cat("Best lambda for Lasso regression:", best_lambda, "\n")## Best lambda for Lasso regression: 0.1020998# Step 6: Fit the final Lasso model with the best lambda

lasso_final <- glmnet(X_train, y_train, alpha = 1, lambda = best_lambda)

# Step 7: Make predictions on the test set

y_pred_lasso <- predict(lasso_final, s = best_lambda, newx = X_test)

# Step 8: Evaluate the model performance

mse_lasso <- mean((y_test - y_pred_lasso)^2)

cat("Mean Squared Error for Lasso regression on test set:", mse_lasso, "\n")## Mean Squared Error for Lasso regression on test set: 1.19065# Step 9: Compare with Ordinary Least Squares (OLS)

ols_model <- lm(y_train ~ X_train)

y_pred_ols <- predict(ols_model, newdata = data.frame(X_train = X_test))

mse_ols <- mean((y_test - y_pred_ols)^2)

cat("Mean Squared Error for OLS on test set:", mse_ols, "\n")## Mean Squared Error for OLS on test set: 34.29426

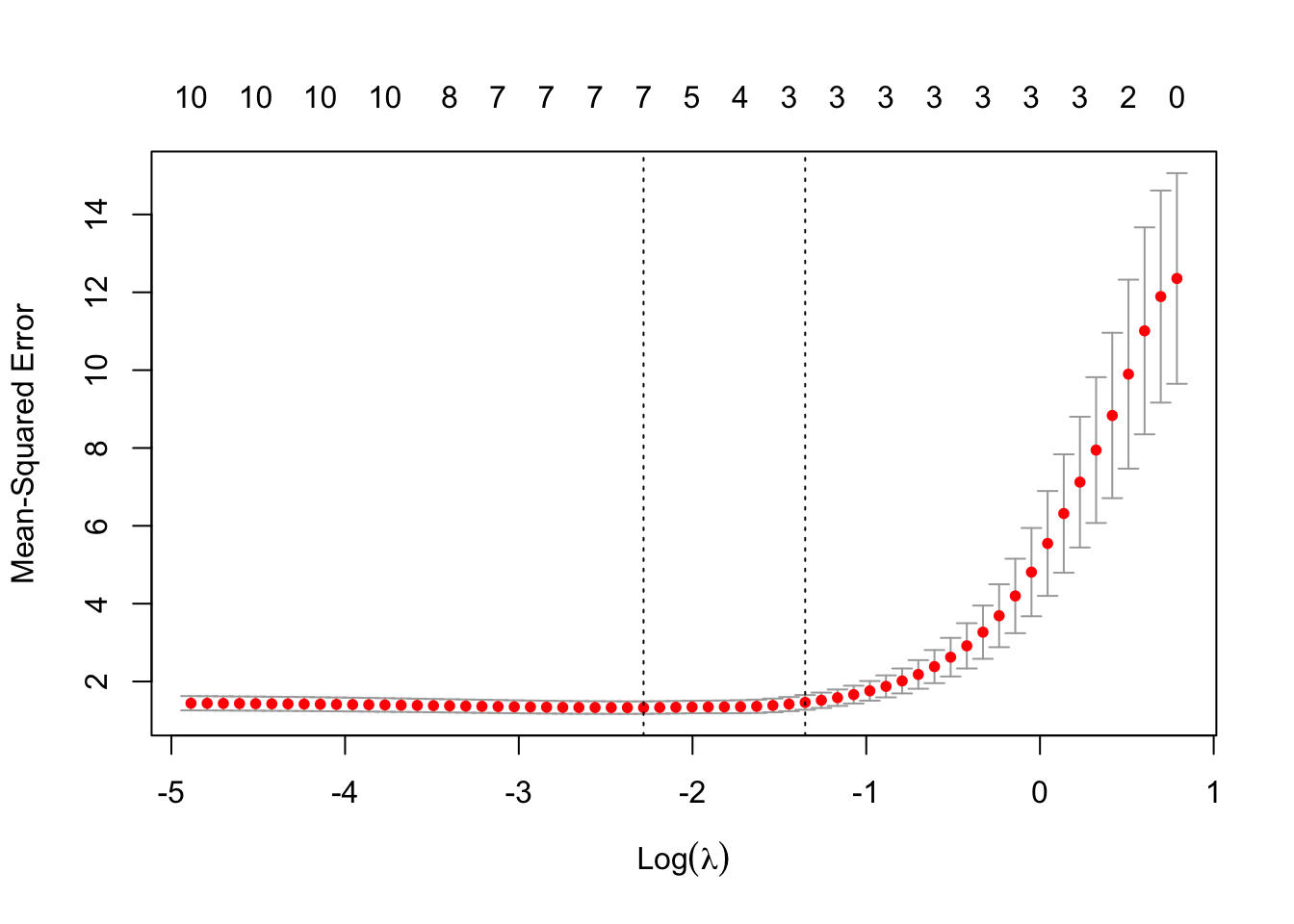

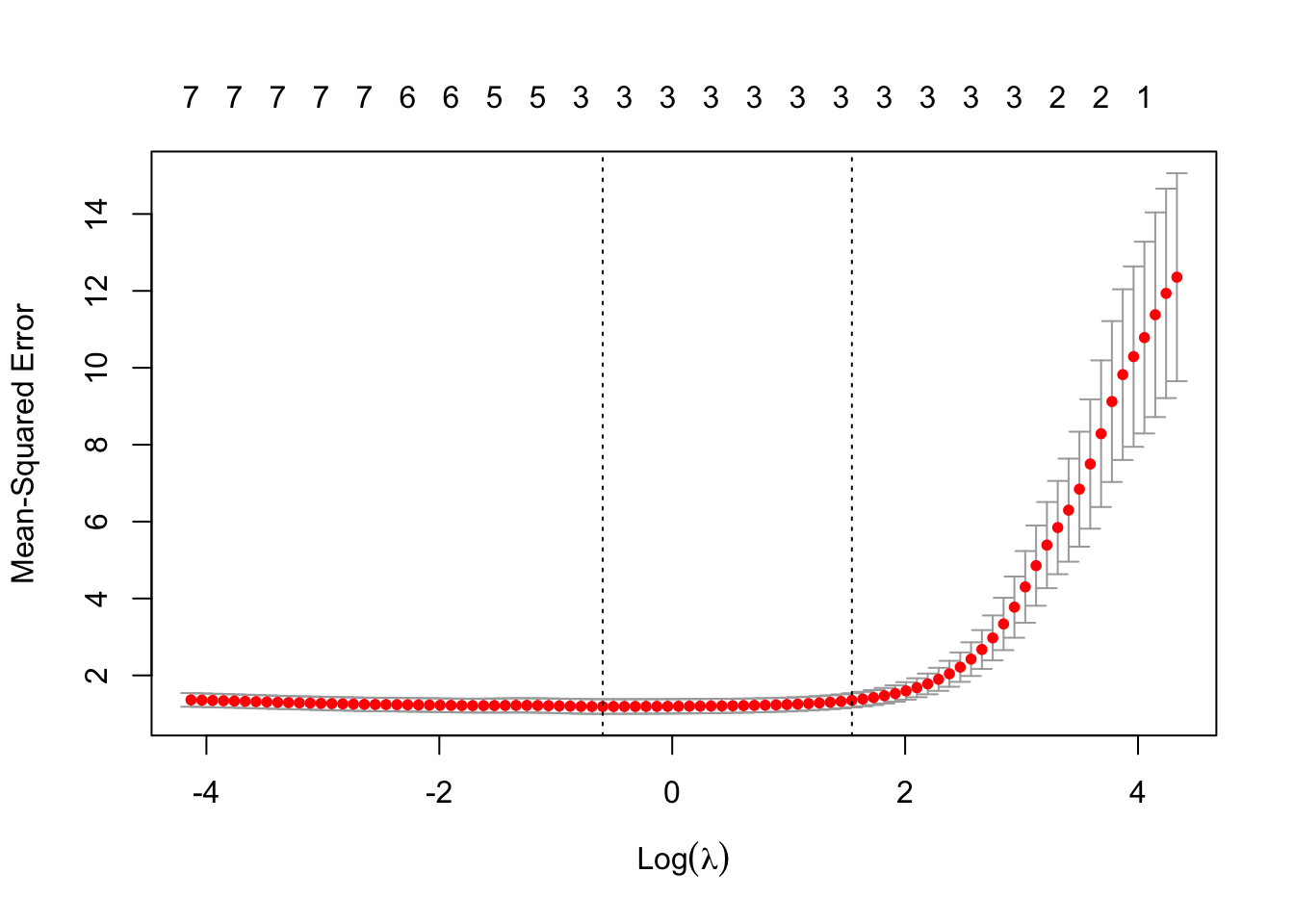

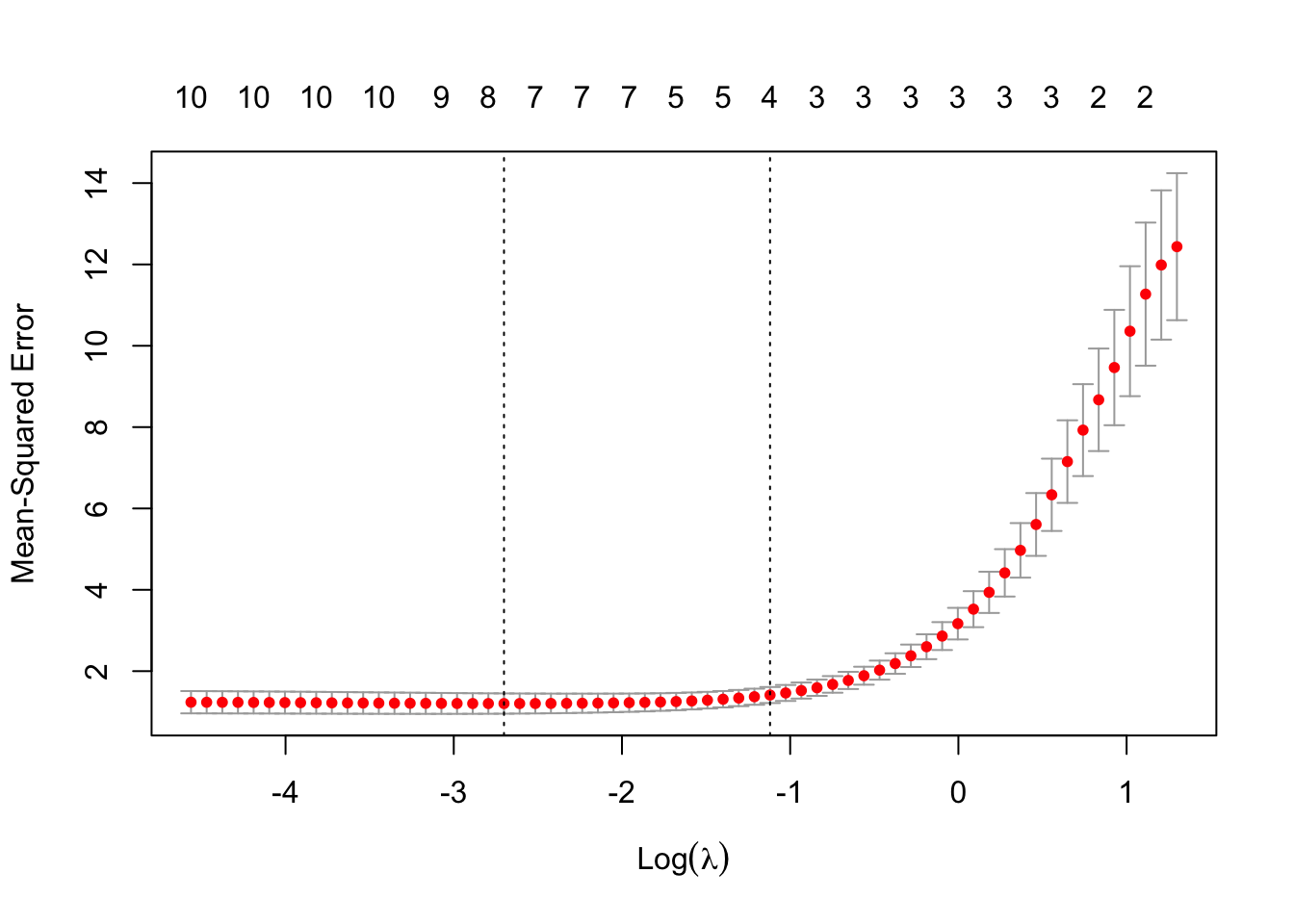

FIGURE 12.2: Cross-validation results for LASSO regression: optimal lambda selection

Data Generation: We begin by generating a synthetic dataset to simulate the application of Lasso regression. The dataset consists of 100 observations and 10 predictors (\(X\)), generated as random normal variables. The response variable (\(y\)) is defined as a linear combination of a subset of predictors with predefined coefficients. Additional random noise is added to \(y\) to reflect real-world variability. This setup allows us to test Lasso regression’s ability to identify relevant predictors and reduce the impact of irrelevant ones.

Train-Test Split: The dataset is split into training and testing subsets to evaluate the model’s performance on unseen data. Specifically, 80% of the data (80 observations) is used for training, and the remaining 20% is held out for testing. The split ensures that the model is trained on one subset and validated on another, mimicking real-world scenarios where future data is not available during model fitting.

Fitting Lasso Regression: The

glmnet()function is used to fit the Lasso regression model on the training data. By settingalpha = 1, we specify Lasso regression, which applies an \(L1\) penalty to the coefficients. This penalty encourages sparsity by shrinking irrelevant coefficients to zero. The fitting process employs coordinate descent, an iterative optimization algorithm. At each iteration, one coefficient is updated while holding others fixed, using the soft-thresholding operator as explained above.Cross-Validation: To determine the optimal value of \(\lambda\), we use the

cv.glmnet()function to perform 10-fold cross-validation. The training dataset is divided into 10 equally sized folds. In each fold, 90% of the data is used for training, and the remaining 10% serves as the validation set. For each fold, the model is trained on a predefined sequence of \(\lambda\) values, ranging from \(\lambda_{\text{max}}\) (the largest \(\lambda\) at which all coefficients are zero) to a minimum value \(\lambda_{\text{min}}\), with a default of 100 \(\lambda\) values in total (as discussed above, we explained how \(\lambda_{\text{max}}\) and the other \(\lambda\) values are calculated). The cross-validation error (e.g., Mean Squared Error) is computed for each \(\lambda\) as the average validation error across all folds:\[ \text{CV Error}(\lambda) = \frac{1}{k} \sum_{i=1}^k \text{MSE}_i(\lambda) \] where \(k = 10\) is the number of folds, and \({MSE}_i(\lambda)\) is the validation error for fold \(i\).

The optimal \(\lambda\) (i.e. the minimum \(\lambda\)) is the value of \(\lambda\) that minimizes the average cross-validation error. Additionally, a less regularized \(\lambda\), \(\lambda_{\text{1SE}}\), may also be selected. This is the largest \(\lambda\) within one standard error of the minimum error, favoring simpler models.

Final Lasso Model: Using the optimal \(\lambda\) obtained from cross-validation, we fit the final Lasso model on the training data. This step ensures that the model incorporates the most relevant predictors while penalizing irrelevant ones. Predictions are then made on the test set using the final model.

Model Evaluation: The Mean Squared Error (MSE) is calculated on the test set to evaluate the predictive performance of the Lasso regression model: